Concolic Program Repair

Automated program repair reduces the manual effort in fixing program errors. However, existing repair techniques modify a buggy program such that it passes given tests.

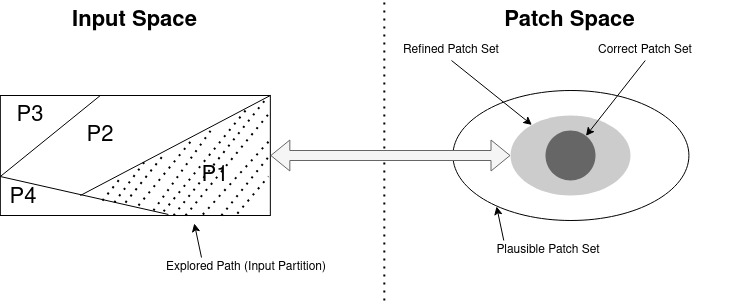

Such repair techniques do not discriminate between correct patches and patches that overfit the available tests and break untested but desired functionality. We attempt to solve this problem

with a novel solution that make use of the duality of space exploration in Input Space and Program Space. We implemented our technique in the form of a tool called CPR and evaluated its efficacy

in reducing the patch space by discarding overfitting patches from a pool of plausible patches. Similar to Cardio-Pulmonary Resuscitation (CPR) does to a patient, our tool CPR resuscitate

or recover programs via appropriate fixes. In this work, we therefore propose and implement an integrated approach for detecting and discarding overfitting patches by exploiting the relationship between the patch space and input space.

We leverage concolic path exploration to systematically traverse the input space (and generate inputs), while systematically ruling out significant parts of the patch space.

Given a long enough time budget, this approach allows a significant reduction in the pool of patch candidates, as shown by our experiments.

Automated program repair reduces the manual effort in fixing program errors. However, existing repair techniques modify a buggy program such that it passes given tests.

Such repair techniques do not discriminate between correct patches and patches that overfit the available tests and break untested but desired functionality. We attempt to solve this problem

with a novel solution that make use of the duality of space exploration in Input Space and Program Space. We implemented our technique in the form of a tool called CPR and evaluated its efficacy

in reducing the patch space by discarding overfitting patches from a pool of plausible patches. Similar to Cardio-Pulmonary Resuscitation (CPR) does to a patient, our tool CPR resuscitate

or recover programs via appropriate fixes. In this work, we therefore propose and implement an integrated approach for detecting and discarding overfitting patches by exploiting the relationship between the patch space and input space.

We leverage concolic path exploration to systematically traverse the input space (and generate inputs), while systematically ruling out significant parts of the patch space.

Given a long enough time budget, this approach allows a significant reduction in the pool of patch candidates, as shown by our experiments.

Publication

Concolic Program Repair

42nd ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI) 2021, 16 pages

Ridwan Shariffdeen, Yannic Noller, Lars Grunske, Abhik Roychoudhury(One-line Abstract) Detecting and discarding over-fitting patches via systematic co-exploration of the patch space and input space

Note: Our artifact was awarded the reusable, functional and available badges at PLDI'21

Note: Our paper is now available on ACM digital library, link

Approach

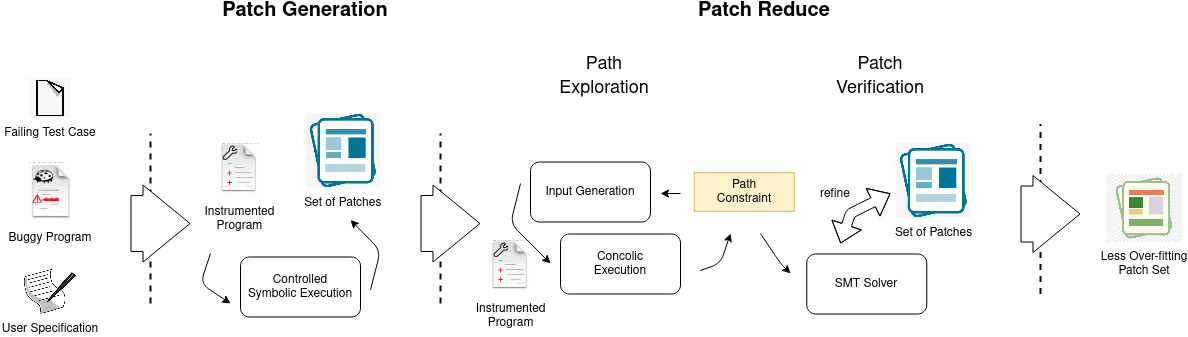

Given the buggy program, a repair budget (time limit, iteration count), the fault location(s), a user specification, the language components for the synthesis, a failing test-case and optionally, a set of initial functional(passing) test cases, CPR generate a refined set of patches that are less over-fitting. The user specification defines the desired behavior of the repaired program (in addition to satisfying the given test cases). If initial tests are available, we assume that at least one failing test is available, which our method seeks to repair, apart from making sure that the user provided specification holds for all paths traversed via concolic exploration. Finally, CPR produces a ranked set of patches based on the explored input space.

Tool

Dependencies

- LLVM - 6.0

- Clang - 6.0

- Klee - Concolic Support Enabled (Github Repo )

- Python - 3.7

Source Files

Docker Image

Dockerhub RepositoryDocker image only with the tool can be obtained using the tag 16.04

Docker image with scripts to reproduce the experiments can be obtained using the tag experiments-cpr

Evaluation Subjects

- Security Vulnerabilities: ExtractFix

- Logical Programs: SVCOMP

- Test-Driven Repair: ManyBugs

| Benchmark | Program | Repository/Link |

|---|---|---|

| ExtractFix | LibTIFF | Repo |

| ExtractFix | Binutils | Repo |

| ExtractFix | LibXML2 | Repo |

| ExtractFix | LibJPEG | Repo |

| ExtractFix | FFMpeg | Repo |

| ExtractFix | Jasper | Repo |

| ExtractFix | Coreutils | Repo |

| ManyBugs | LibTIFF | Repo |

| ManyBugs | GZip | Repo |

| SVCOMP | Insertion Sort | Link |

| SVCOMP | Linear Search | Link |

| SVCOMP | String | Link |

| SVCOMP | Eureka | Link |

| SVCOMP | Nested Loop | Link |

| SVCOMP | Sum | Link |

| SVCOMP | Bubble Sort | Link |

| SVCOMP | Unique List | Link |

| SVCOMP | Standard Array | Link |

| SVCOMP | Recursive Addition | Link |

Evaluation Results

We evaluate CPR on three benchmarks for repairing security vulnerabilities, logical errors and test-failures. We analyse the reduction ratio of the input space in terms of the number of paths we discard due to infeasibility and the reduction ratio of the patch space in terms of the number of patches removed due to overfitting. A gist of the results from our evaluation is given below, for full set of details please refer the paper.| # | Program | Bug ID | Paths | Patches | Explored | Discarded | Prune Ratio | Synthesised | Removed | Refine Ratio |

|---|---|---|---|---|---|---|---|---|

| 1 | LibTIFF | CVE-2016-5321 | 67 | 77 | 15% | 174 | 70 | 40% |

| 2 | LibTIFF | CVE-2016-9273 | 10 | 2 | 2% | 260 | 119 | 46% |

| 3 | LibTIFF | CVE-2016-3623 | 102 | 21 | 12% | 130 | 30 | 23% |

| 4 | Binutils | CVE-2018-10372 | 25 | 1 | 0.1% | 74 | 35 | 47% |

| 5 | LibXML2 | CVE-2012-5134 | 80 | 271 | 10% | 260 | 129 | 48% |

| 6 | LibJPEG | CVE-2018-19664 | 84 | 26 | 4% | 130 | 130 | 0% |

| 7 | Jasper | CVE-2016-8691 | 69 | 7 | 9% | 260 | 164 | 63% |

Artifacts

We provide a replication package for our experiments with CPR on the selected benchmarks in a Docker container, which consists of setup scripts/configuration files to re-run the experiments. The Docker environment consists of all dependencies for CPR, KLEE, and other subjects in the benchmarks. For each experiment, we also provide the logs and other artifacts (i.e., generated patches, rankings) inside an archive named 'results.tar.gz'.You can access the replication package via Dockerhub at this link (use the tag experiments-cpr) by using the following command:

docker pull rshariffdeen/cpr:experiments-cprdocker build -t experiments-cpr .